Apache Airflow

A platform to programmatically author, schedule and monitor workflows

🚀 About

In this HashiQube DevOps lab, you'll get hands-on experience with Apache Airflow. You'll learn how to:

- Install Airflow with Helm charts

- Run Airflow on Kubernetes using Minikube

- Configure Airflow connections

- Create a Python DAG that runs DBT

This lab shows how Airflow can orchestrate a data pipeline end to end. The example DAG runs DBT, so be sure to check out the DBT section as well.

📋 Provision

Run the provisioning scripts directly, in this order:

bash docker/docker.sh

bash database/postgresql.sh

bash minikube/minikube.sh

bash dbt/dbt.sh

bash apache-airflow/apache-airflow.sh

Or provision with Vagrant:

vagrant up --provision-with basetools,docker,docsify,postgresql,minikube,dbt,apache-airflow

Or use Docker Compose, opening a shell in the container and running the scripts there:

docker compose exec hashiqube /bin/bash

bash hashiqube/basetools.sh

bash docker/docker.sh

bash docsify/docsify.sh

bash database/postgresql.sh

bash minikube/minikube.sh

bash dbt/dbt.sh

bash apache-airflow/apache-airflow.sh

🔑 Web UI Access

To access the Airflow web UI, open http://localhost:18889 in your browser.

Default login:

Username: admin

Password: admin
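Before opening a browser, it can help to confirm that the port-forward is actually serving the UI. The following is a minimal sketch, not part of the lab's scripts. It assumes the UI is published at `http://localhost:18889` (the address printed at the end of the provisioner script), and the helper name `web_ui_is_up` is hypothetical.

```python
import http.client
import urllib.error
import urllib.request


def web_ui_is_up(url: str = "http://localhost:18889", timeout: float = 5.0) -> bool:
    """Return True if an HTTP server answers at `url`, whatever the status code."""
    # The target is local, so bypass any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded with an error status, so it is reachable.
        return True
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, or a broken response: treat as not up.
        return False
```

A result of `False` usually means the `kubectl port-forward` process is not running yet or the webserver pod is still starting.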

🧩 Architecture

Airflow is deployed on Minikube (Kubernetes) using Helm, with custom configurations provided in the values.yaml file. The architecture includes:

- Airflow scheduler running in a Kubernetes pod

- Example DAGs mounted into the scheduler pod

- PostgreSQL database for Airflow metadata
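One way to confirm that the components above came up is to inspect the pod list for the `airflow` namespace. The sketch below is illustrative only: it parses the default column output of `kubectl get pods --namespace airflow`. The function names `pod_statuses` and `not_running`, and the sample pod names, are assumptions and are not taken from a real cluster.

```python
from typing import Dict, List


def pod_statuses(kubectl_output: str) -> Dict[str, str]:
    """Map pod name -> STATUS from the text printed by `kubectl get pods`."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    if not lines:
        return {}
    header = lines[0].split()
    name_col = header.index("NAME")
    status_col = header.index("STATUS")
    statuses = {}
    for line in lines[1:]:
        fields = line.split()
        statuses[fields[name_col]] = fields[status_col]
    return statuses


def not_running(statuses: Dict[str, str]) -> List[str]:
    """Names of pods whose status is neither Running nor Completed."""
    return sorted(
        name for name, status in statuses.items()
        if status not in ("Running", "Completed")
    )


# Hypothetical sample output; real pod names and suffixes will differ.
SAMPLE = """\
NAME                                 READY   STATUS    RESTARTS   AGE
airflow-postgresql-0                 1/1     Running   0          5m
airflow-scheduler-5d9c7b6f4-abcde    2/2     Running   0          5m
airflow-webserver-7c8d9f5b6-fghij    0/1     Pending   0          5m
"""
```

In practice the text would come from something like `subprocess.run(["kubectl", "get", "pods", "--namespace", "airflow"], capture_output=True, text=True).stdout`.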

📊 Example DAGs

In the dags folder, you'll find two example DAGs:

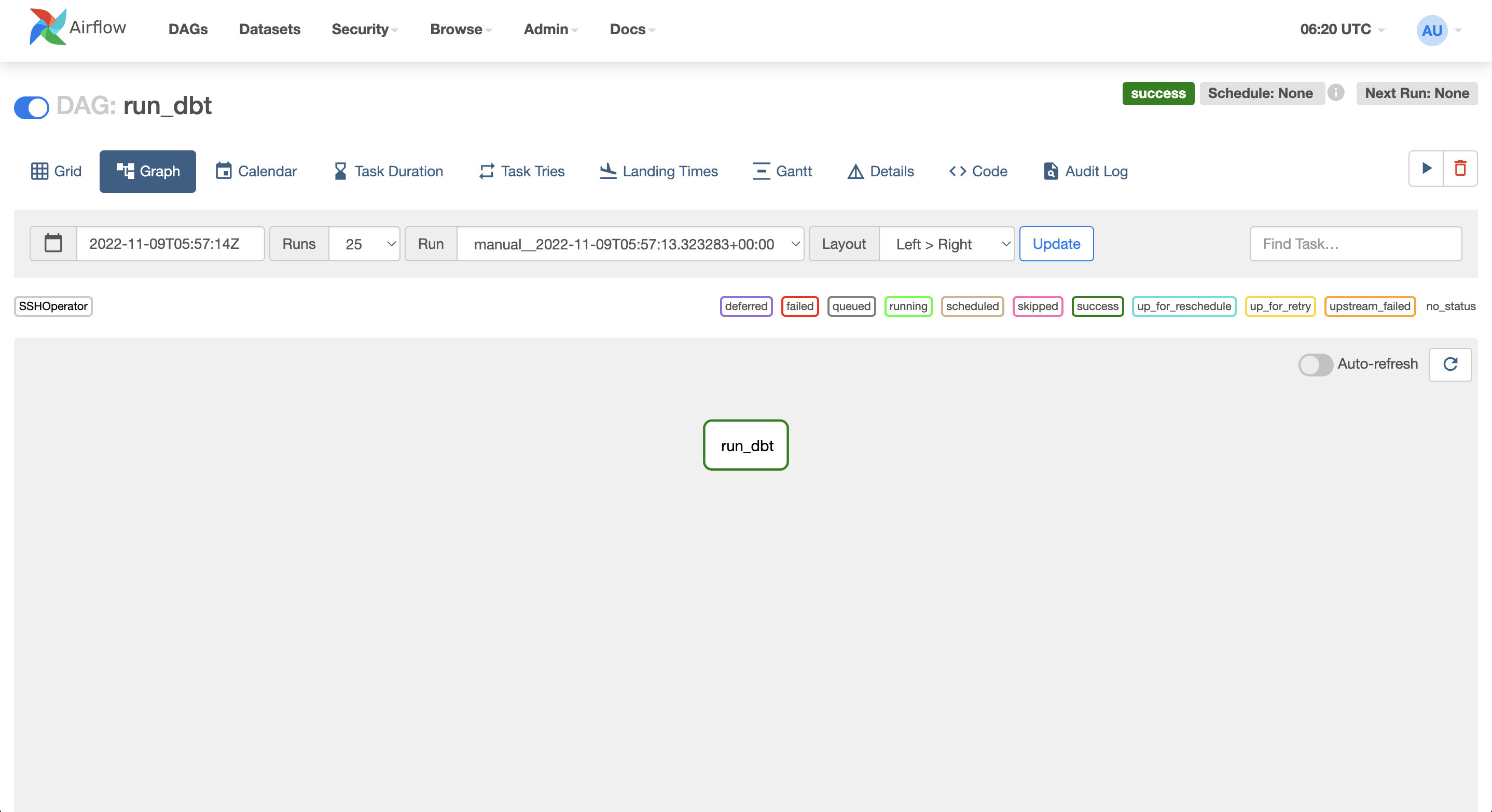

example-dag.py

- Runs DBT commands using the SSHOperator

- Connects to Hashiqube via SSH to execute data transformation tasks

test-ssh.py

- A simple DAG that tests the SSH connection to Hashiqube

- Useful for verifying connectivity before running more complex workflows

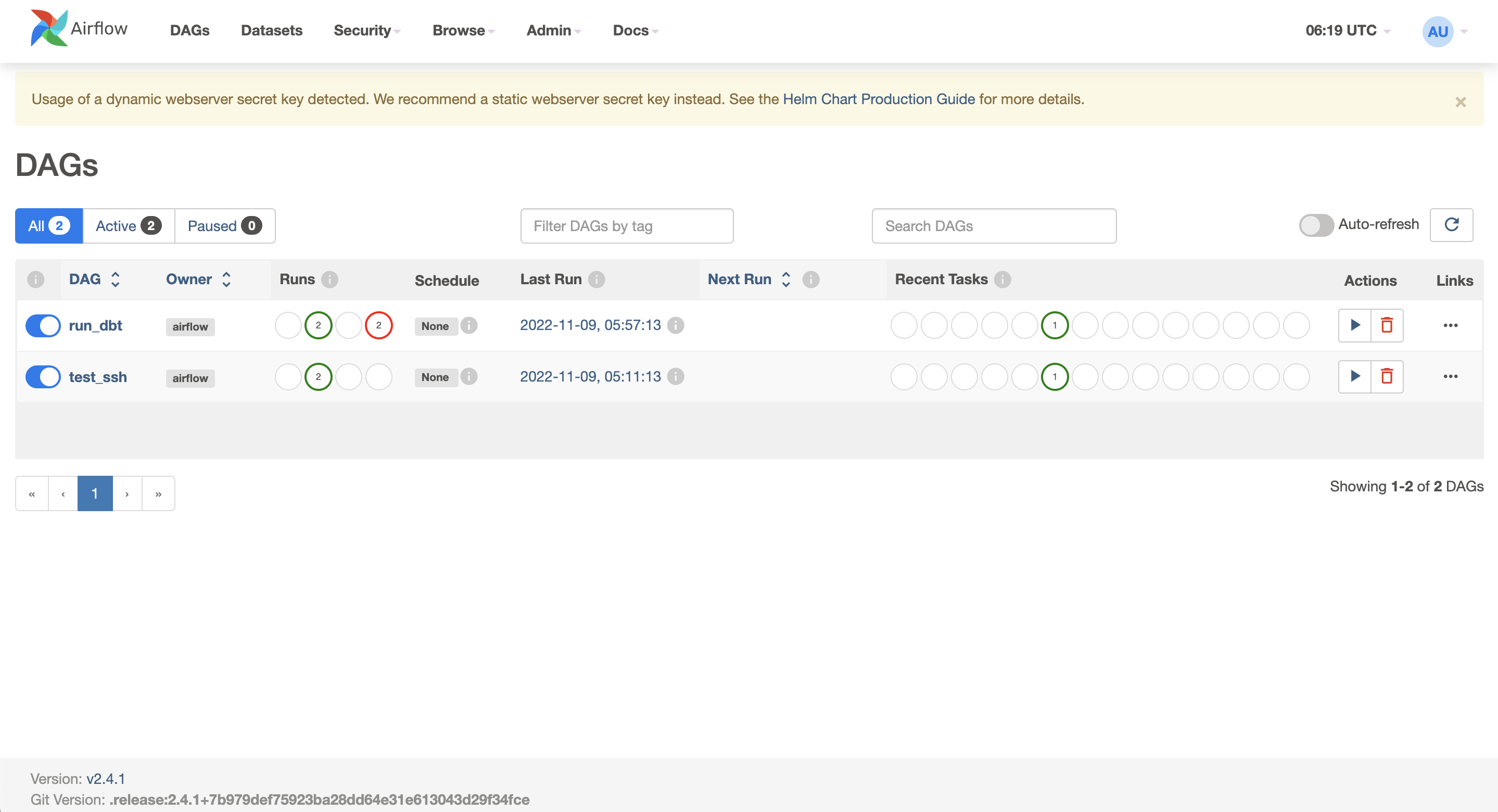

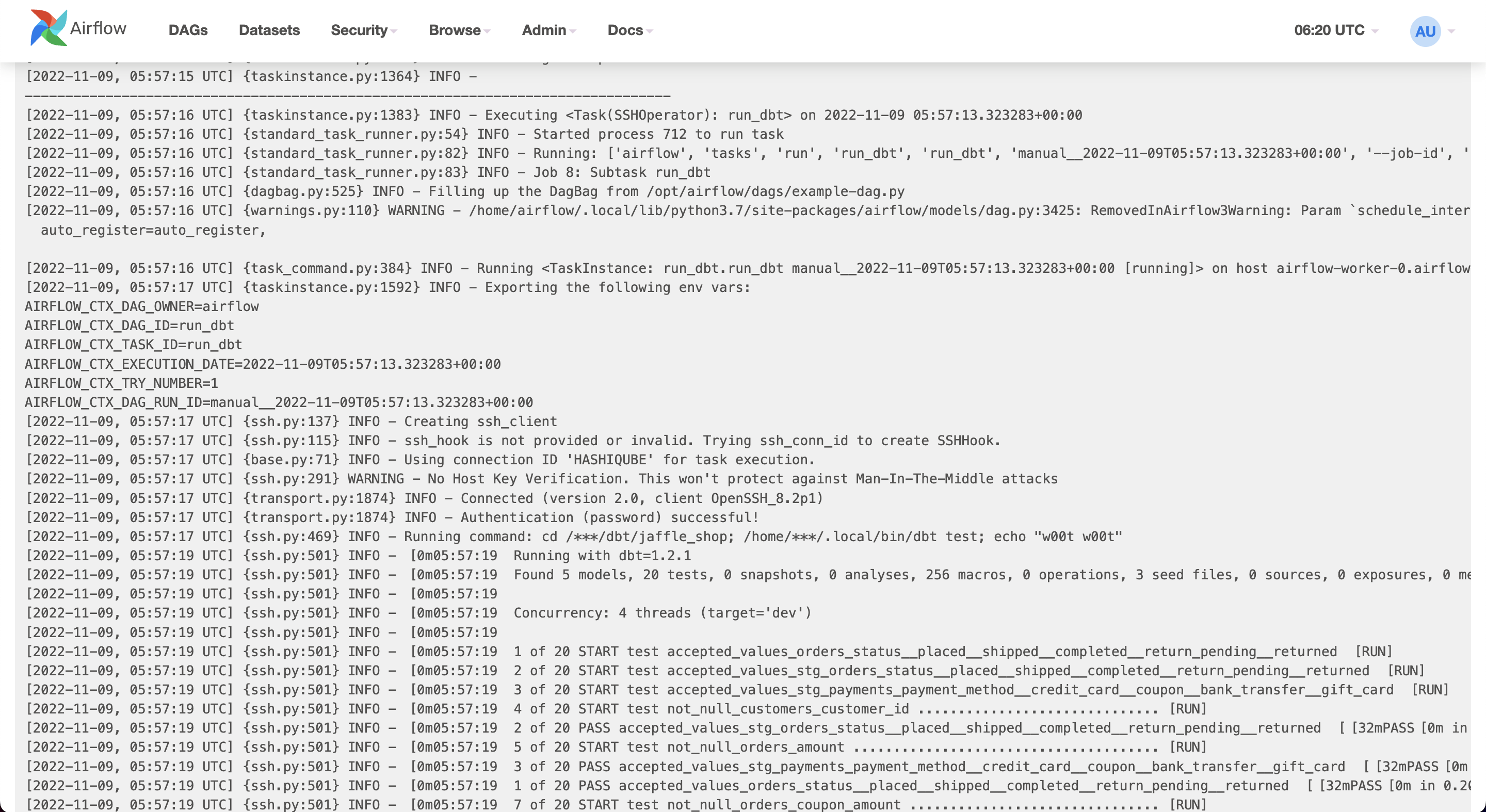
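The same kind of connectivity check can be sketched outside Airflow. The snippet below is an illustration under stated assumptions, not the contents of test-ssh.py: it opens a TCP connection to the SSH port and reads the server's identification string, which normally begins with `SSH-`. The default host `10.9.99.10` and port `22` are the values the provisioner script uses for the HASHIQUBE connection; the function name `ssh_banner` is hypothetical.

```python
import socket
from typing import Optional


def ssh_banner(host: str = "10.9.99.10", port: int = 22, timeout: float = 5.0) -> Optional[str]:
    """Return the SSH identification line sent by the server, or None on failure."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.settimeout(timeout)
            data = conn.recv(256)
    except OSError:
        # Refused, unreachable, or timed out.
        return None
    lines = data.decode("ascii", errors="replace").splitlines()
    # Only the first line is inspected; this is a simplification.
    if lines and lines[0].startswith("SSH-"):
        return lines[0]
    return None
```

This only shows that an SSH server is listening. It does not verify the credentials, which is what running the test DAG inside Airflow adds.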

📸 Airflow Dashboard

DAGs list in the Airflow UI

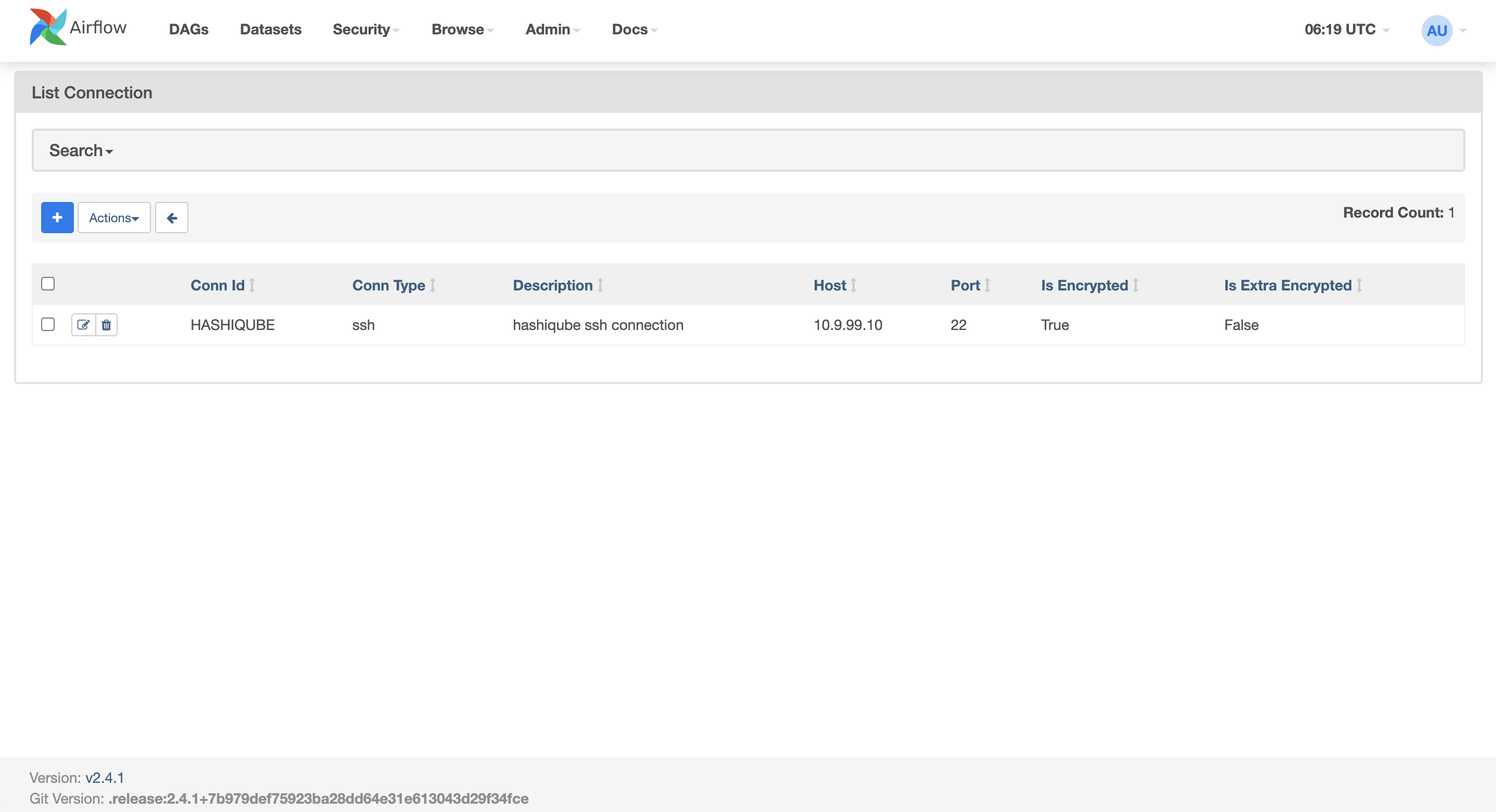

Configured connections in Airflow

Execution graph of a DBT DAG run

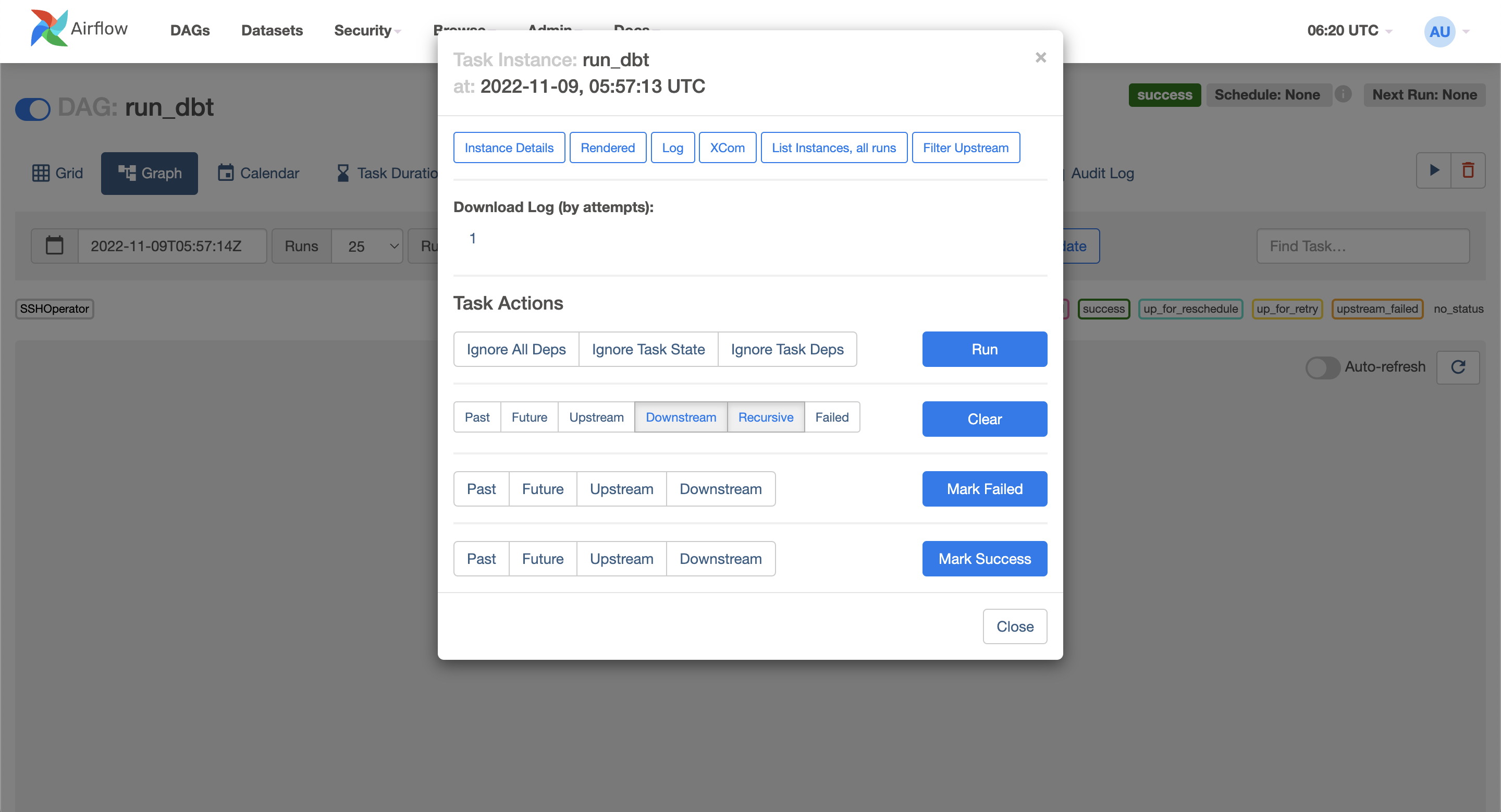

Task instance details

Task execution results

🔍 Code Examples

Provisioner Script

The following script automates the Airflow deployment:

#!/bin/bash

# Print the commands that are run
set -x

# Stop execution if something fails
# set -e

# This script installs Airflow on minikube
# https://github.com/hashicorp/vagrant/issues/8878
# https://github.com/apache/airflow
# https://airflow.apache.org/docs/helm-chart/stable/index.html

# ... rest of provisioner script

Example DAG

Here's a sample DAG that runs DBT tasks:

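"""
This DAG runs dbt models on Hashiqube
"""

import os
from datetime import datetime, timedelta

from airflow import DAG
# Note: on recent Airflow 2.x releases the two import paths below are deprecated;
# the maintained equivalents are airflow.providers.ssh.operators.ssh.SSHOperator
# and airflow.operators.empty.EmptyOperator.
from airflow.contrib.operators.ssh_operator import SSHOperator
from airflow.operators.dummy_operator import DummyOperator

# Defaults applied to every task in the DAG
default_args = {
    'owner': 'airflow',
    'depends_on_past': False,
    'email': ['[email protected]'],
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}

# ... rest of DAG code

🔗 Additional Resources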

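- [Apache Airflow Official Website](https://airflow.apache.org/)

- [Airflow Helm Chart on Artifact Hub](https://artifacthub.io/packages/helm/airflow-helm/airflow/)

- [Airflow Helm Chart Documentation](https://airflow.apache.org/docs/helm-chart/stable/index.html)

- [Adding Connections and Variables](https://airflow.apache.org/docs/helm-chart/stable/adding-connections-and-variables.html)

- [Airflow 1.10.2 Documentation (PDF)](https://airflow.readthedocs.io/_/downloads/en/1.10.2/pdf/)

- [Helm Chart Parameters Reference](https://airflow.apache.org/docs/helm-chart/stable/parameters-ref.html)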

#!/bin/bash

# https://airflow.apache.org/docs/apache-airflow/stable/installation/index.html
# https://airflow.apache.org/docs/helm-chart/stable/index.html
# https://github.com/apache/airflow/tree/main/chart
# https://github.com/apache/airflow/blob/main/chart/values.yaml
# https://github.com/airflow-helm/charts/blob/main/charts/airflow/docs/guides/quickstart.md
# https://airflow.apache.org/docs/helm-chart/stable/adding-connections-and-variables.html
# https://airflow.readthedocs.io/_/downloads/en/1.10.2/pdf/
# https://airflow.apache.org/docs/helm-chart/stable/parameters-ref.html
# https://artifacthub.io/packages/helm/airflow-helm/airflow/

cd ~/

# Determine CPU Architecture
arch=$(lscpu | grep "Architecture" | awk '{print $NF}')
if [[ $arch == x86_64* ]]; then
  ARCH="amd64"
elif [[ $arch == aarch64 ]]; then
  ARCH="arm64"
fi
echo -e '\e[38;5;198m'"CPU is $ARCH"

echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ Cleanup"
echo -e '\e[38;5;198m'"++++ "
for i in $(ps aux | grep kubectl | grep -ve sudo -ve grep -ve bin | grep -e airflow | tr -s " " | cut -d " " -f2); do kill -9 $i; done
sudo --preserve-env=PATH -u vagrant helm delete airflow --namespace airflow
sudo --preserve-env=PATH -u vagrant kubectl delete -f /vagrant/apache-airflow/airflow-dag-pvc.yaml
sudo --preserve-env=PATH -u vagrant kubectl delete namespace airflow

echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ Create Namespace airflow for Airflow"
echo -e '\e[38;5;198m'"++++ "
sudo --preserve-env=PATH -u vagrant kubectl create namespace airflow

echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ Create PVC for Airflow DAGs in /vagrant/apache-airflow/dags"
echo -e '\e[38;5;198m'"++++ "
sudo --preserve-env=PATH -u vagrant kubectl apply -f /vagrant/apache-airflow/airflow-dag-pvc.yaml

# Install with helm
# https://airflow.apache.org/docs/helm-chart/stable/index.html
echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ Installing Apache Airflow using Helm Chart in namespace airflow"
echo -e '\e[38;5;198m'"++++ "

echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ helm repo add apache-airflow https://airflow.apache.org"
echo -e '\e[38;5;198m'"++++ "
sudo --preserve-env=PATH -u vagrant helm repo add apache-airflow https://airflow.apache.org
sudo --preserve-env=PATH -u vagrant helm repo update

# https://github.com/airflow-helm/charts/blob/main/charts/airflow/docs/guides/quickstart.md
echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ helm install airflow apache-airflow/airflow"
echo -e '\e[38;5;198m'"++++ "
sudo --preserve-env=PATH -u vagrant helm upgrade --install airflow apache-airflow/airflow --namespace airflow --create-namespace \
  --values /vagrant/apache-airflow/values.yaml \
  --set dags.persistence.enabled=true \
  --set dags.persistence.existingClaim=airflow-dags \
  --set dags.gitSync.enabled=false

attempts=0
max_attempts=15
while ! ( sudo --preserve-env=PATH -u vagrant kubectl get pods --namespace airflow | grep web | tr -s " " | cut -d " " -f3 | grep Running ) && (( $attempts < $max_attempts )); do
  attempts=$((attempts+1))
  sleep 60;
  echo -e '\e[38;5;198m'"++++ "
  echo -e '\e[38;5;198m'"++++ Waiting for Apache Airflow to become available, (${attempts}/${max_attempts}) sleep 60s"
  echo -e '\e[38;5;198m'"++++ "
  sudo --preserve-env=PATH -u vagrant kubectl get po --namespace airflow
  sudo --preserve-env=PATH -u vagrant kubectl get events | grep -e Memory -e OOM
done

echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ kubectl port-forward 18889:8080"
echo -e '\e[38;5;198m'"++++ "
attempts=0
max_attempts=15
while ! ( sudo netstat -nlp | grep 18889 ) && (( $attempts < $max_attempts )); do
  attempts=$((attempts+1))
  sleep 60;
  echo -e '\e[38;5;198m'"++++ "
  echo -e '\e[38;5;198m'"++++ kubectl port-forward service/airflow-webserver 18889:8080 --namespace airflow --address=\"0.0.0.0\", (${attempts}/${max_attempts}) sleep 60s"
  echo -e '\e[38;5;198m'"++++ "
  sudo --preserve-env=PATH -u vagrant kubectl port-forward service/airflow-webserver 18889:8080 --namespace airflow --address="0.0.0.0" > /dev/null 2>&1 &
done

echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ Add SSH Connection for Hashiqube"
echo -e '\e[38;5;198m'"++++ "
kubectl exec airflow-worker-0 -n airflow -- /bin/bash -c '/home/airflow/.local/bin/airflow connections add HASHIQUBE --conn-description "hashiqube ssh connection" --conn-host "10.9.99.10" --conn-login "vagrant" --conn-password "vagrant" --conn-port "22" --conn-type "ssh"'

echo -e '\e[38;5;198m'"++++ "
echo -e '\e[38;5;198m'"++++ Docker stats"
echo -e '\e[38;5;198m'"++++ "
sudo --preserve-env=PATH -u vagrant docker stats --no-stream -a

echo -e '\e[38;5;198m'"++++ Apache Airflow Web UI: http://localhost:18889"
echo -e '\e[38;5;198m'"++++ Username: admin; Password: admin"
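Both wait loops in the script follow the same pattern: poll a condition, sleep, and give up after a fixed number of attempts. As an illustration only (the script itself is Bash), the pattern could be expressed in Python as below. `wait_until` and its parameters are hypothetical names; the defaults mirror the script's `max_attempts=15` and 60-second sleep.

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    max_attempts: int = 15,
    delay_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll `condition` until it is true or `max_attempts` sleeps have elapsed.

    Returns True if the condition became true, False if attempts ran out.
    """
    attempts = 0
    while not condition():
        if attempts >= max_attempts:
            return False
        attempts += 1
        # `sleep` is injectable so the loop can be exercised without waiting.
        sleep(delay_seconds)
    return True
```

Unlike the Bash loops, which fall through silently once the attempts are exhausted, this version returns a boolean so the caller can decide whether to abort.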